K. Gwet's Inter-Rater Reliability Blog: Benchmarking Agreement Coefficients | Inter-rater reliability: Cohen kappa, Gwet AC1/AC2, Krippendorff Alpha

Interrater agreement and interrater reliability: Key concepts, approaches, and applications - ScienceDirect

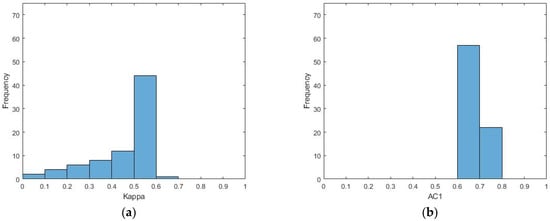

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Using appropriate Kappa statistic in evaluating inter-rater reliability. Short communication on “Groundwater vulnerability and contamination risk mapping of semi-arid Totko river basin, India using GIS-based DRASTIC model and AHP techniques ...

The Equivalence of Weighted Kappa and the Intraclass Correlation Coefficient as Measures of Reliability - Joseph L. Fleiss, Jacob Cohen, 1973

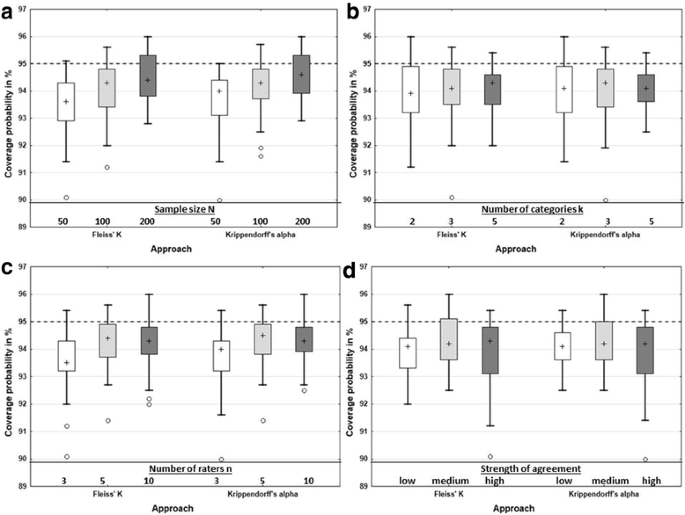

Measuring inter-rater reliability for nominal data – which coefficients and confidence intervals are appropriate? | BMC Medical Research Methodology | Full Text

Generalized Cohen's Kappa: A Novel Inter-rater Reliability Metric for Non-mutually Exclusive Categories | SpringerLink

Percentage agreement and Cohen's Kappa measure of inter-rater reliability | Download Scientific Diagram